Statistical Laboratory

Probability Seminars

Easter Term 2002

All who are interested are encouraged to take part fully by presenting their own ideas and discussing those of others.

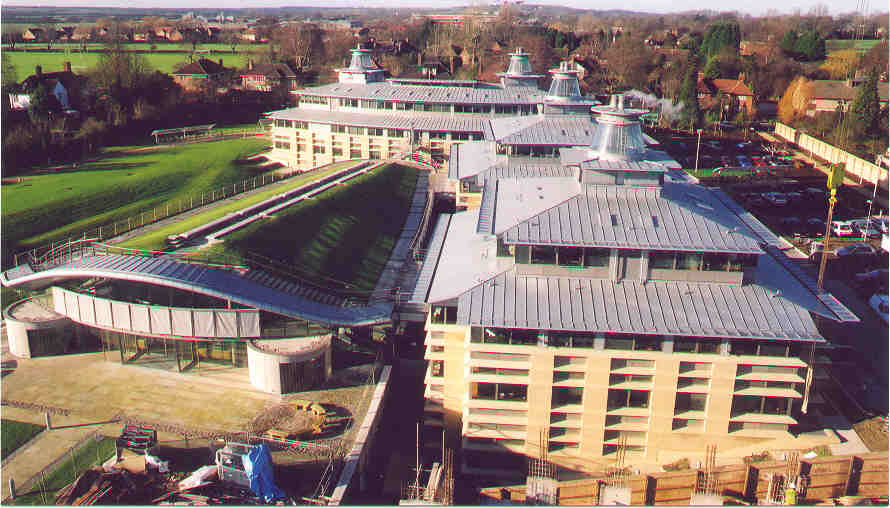

Talks will be in Meeting Room 12 of the Centre for Mathematical Sciences, Pavilion D.

Tuesday 30 April

2pm Yuri Suhov

Quantum entropy and quantum coding

Abstract. In classical information coding, we want to encode "messages" $x_1^n=(x_1,\ldots,x_n)$ of length $n\to\infty$, generated by a "source" represented by an ergodic process $X_{-\infty}^\infty$ with values in a "source alphabet" $V$ and probabilities $p_n(x_1^n)={\mathbf P}(X_1^n=x_1^n)$. A code (of length $N$) is a map $x_1^n\in V^n\mapsto y_1^N\in W^N$, where $W$ is a "code alphabet" (a typical example is where $V$ is the Latin alphabet and $W=\{0,1\}$). One wants a code of the shortest possible length $N(n)$ for which encoding errors (two different messages receiving the same codeword) occur with asymptotically vanishing probability. That is, we want to extract a subset of messages $D_n\subset V^n$ with high probability $p_n(D_n)$ and low cardinality $\#D_n$ (two opposing requirements).

We say that $h\geq 0$ is the information rate of the source $X_{-\infty}^\infty$ if for every $R>h$ there exist sets $D_n\subset V^n$ such that $\#D_n\leq 2^{Rn}$ and $p_n(D_n)\to 1$, while for $R<h$ no such sets exist. The value $h$ coincides with the Shannon (or Kolmogorov–Sinai) entropy rate of $X_{-\infty}^\infty$, and for $W=\{0,1\}$ the shortest length satisfies $N(n)/n\to h$.

In the talk, I will focus on a quantum analogue of the coding problem and present various results in this direction, without assuming any serious knowledge of quantum mechanics or classical information theory.
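The classical definition of the information rate can be checked numerically in the simplest case. The sketch below is only an illustration of the classical setting (it does not touch the quantum results of the talk) and assumes an i.i.d. Bernoulli($p$) source on $V=\{0,1\}$, for which $h$ is the binary entropy $-p\log_2 p-(1-p)\log_2(1-p)$; the function names are hypothetical. It builds a set $D_n$ with $p_n(D_n)\geq 1-\varepsilon$ from whole classes of messages having the same number of ones, taken in order of decreasing per-message probability, and reports $\log_2\#D_n/n$, which should drift down towards $h$ as $n$ grows.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy (in bits) of a Bernoulli(p) variable, 0 < p < 1."""
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def high_probability_set_rate(n: int, p: float, eps: float = 0.05) -> float:
    """Return log2(#D_n) / n for a set D_n of binary messages with p_n(D_n) >= 1 - eps.

    Assumes an i.i.d. Bernoulli(p) source on V = {0, 1}. D_n is built greedily
    from whole "type classes" (all messages with exactly j ones), most probable
    message first, so it is a high-probability set but not necessarily the smallest.
    """
    def log_prob_of_message(j: int) -> float:
        # Natural log of the probability of ONE message containing j ones.
        return j * math.log(p) + (n - j) * math.log(1 - p)

    covered, size = 0.0, 0
    for j in sorted(range(n + 1), key=log_prob_of_message, reverse=True):
        if covered >= 1 - eps:
            break
        # Probability of the whole class, computed in log space to avoid underflow.
        log_class = (math.lgamma(n + 1) - math.lgamma(j + 1) - math.lgamma(n - j + 1)
                     + log_prob_of_message(j))
        covered += math.exp(log_class)
        size += math.comb(n, j)  # exact integer number of messages in the class
    return math.log2(size) / n


if __name__ == "__main__":
    p = 0.1
    print(f"entropy rate h = {binary_entropy(p):.4f} bits")
    for n in (100, 1000, 4000):
        print(n, round(high_probability_set_rate(n, p), 4))
```

For $p=0.1$ the entropy rate is $h\approx 0.469$ bits. The excess of $\log_2\#D_n/n$ over $h$ shrinks only roughly like $n^{-1/2}$, because $D_n$ must contain messages whose number of ones exceeds $np$ by a few standard deviations.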

Tuesday 14 May

2pm Michail Loulakis (ETH)

Einstein Relation for a tagged particle in the simple exclusion process

Consider the symmetric simple exclusion process, an interacting particle system which is diffusive. If we tag a particle and follow its evolution, its position converges after rescaling to a diffusion characterized by a matrix $D$. Now, if the tracer particle is driven by a small force, it picks up a mean velocity. The mean velocity is proportional to the small force, and the constant of proportionality, in the limit, can be computed from the diffusion matrix $D$ of the tracer particle with no driving force. This relationship, sometimes referred to as the Einstein Relation, is believed to be generally valid. The difficulty of the problem lies in the fact that the equilibria of the simple exclusion process with a driven tracer particle are not, in general, explicitly accessible. Using tools from large deviations, we establish the validity of the Einstein Relation for all symmetric simple exclusion processes in dimension $d\geq 3$.

Tuesday 21 May (Joint with the Combinatorics seminar)

2pm Alan Stacey

Percolation on finite graphs

Tuesday 28 May

2pm John Kingman

Probability in battles

Tuesday 18 June

2pm Shiri Artstein (Tel Aviv)

Entropy increases at every step

Abstract. If $X_i$ are IID random variables, then the entropy of $\frac{1}{\sqrt n}\sum_{i=1}^n X_i$ increases with $n$.

Thursday 18 July

2pm N. Ganikhodjaev and U. Rozikov (Tashkent University and Institute of Mathematics, Uzbekistan)

Gibbs random fields on Cayley trees

Abstract. We consider models of Gibbs random fields on a tree: Ising, Potts, SOS and their generalizations. The picture on a tree is quite different from that on a lattice. For example, a ferromagnetic nearest-neighbour Ising model

with zero field on a tree has one translation-invariant Gibbs measure at and above the critical temperature and three below it, the critical temperature being $J\,({\rm arctanh}\,(1/k))^{-1}$, where $k$ is the number of branches descending from a single vertex and $J>0$ is a coupling constant. Moreover, at low temperatures there exists a continuum of non-translation-invariant extreme Gibbs measures. Similar results hold for the Potts model.

The talk will be accessible without any prior knowledge of the theory of Gibbs measures.
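The critical temperature quoted in this abstract can be probed numerically. The sketch assumes the Hamiltonian $H=-J\sum_{\langle x,y\rangle}\sigma_x\sigma_y$ with zero external field, units in which Boltzmann's constant equals 1, and the standard recursion for the effective boundary field on a tree in which every vertex has $k$ children; the function names are hypothetical and this is an illustration, not the speakers' method. A translation-invariant measure corresponds to a fixed point of $h=k\,{\rm arctanh}(\tanh(J/T)\tanh h)$: iterating from a large positive field converges to $0$ above $T_c$ and to a positive value below it, the latter giving the "plus" measure (its mirror image $-h$ gives the "minus" one, and $h=0$ the free one).

```python
import math


def critical_temperature(J: float, k: int) -> float:
    """T_c = J * (arctanh(1/k))**(-1), in units where Boltzmann's constant is 1."""
    if J <= 0 or k < 2:
        raise ValueError("need a ferromagnetic coupling J > 0 and k >= 2 branches")
    return J / math.atanh(1.0 / k)


def translation_invariant_field(J: float, k: int, T: float,
                                h0: float = 5.0, steps: int = 5000) -> float:
    """Iterate h -> k * arctanh(tanh(J/T) * tanh(h)) from a '+' boundary field h0.

    A limit of 0 corresponds to the free (disordered) measure only; a limit h > 0
    signals an additional 'plus' translation-invariant measure (and 'minus' for -h).
    """
    theta = math.tanh(J / T)
    h = h0
    for _ in range(steps):
        h = k * math.atanh(theta * math.tanh(h))
    return h


if __name__ == "__main__":
    J, k = 1.0, 2
    Tc = critical_temperature(J, k)
    print(f"T_c = {Tc:.4f}")  # J / arctanh(1/2) for J = 1, k = 2
    for T in (0.5 * Tc, 2.0 * Tc):
        print(f"T = {T:.3f}: limiting field {translation_invariant_field(J, k, T):.4f}")
```

The iteration is only a numerical probe: exactly at $T_c$ the field still tends to $0$, but slowly, so the interesting comparison is between temperatures clearly above and clearly below $T_c$.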

Graduate students especially are urged to attend.

Directions to the Laboratory.

The CMS is reached by a path along the east side of the Isaac Newton Institute in Clarkson Road.

Return to Geoffrey Grimmett's home page.
